How to get status of Kubernetes node using Golang: this guide details a methodology for accessing and interpreting Kubernetes node status information within Go applications. This involves interacting with the Kubernetes API, extracting node attributes such as the readiness condition, kubelet heartbeats, and allocatable resources, and implementing robust error handling and monitoring strategies. Understanding Kubernetes node status is critical for maintaining cluster health, automating tasks, and facilitating proactive troubleshooting.

The process begins with establishing a connection to the Kubernetes cluster using the `client-go` library. Subsequently, code examples demonstrate how to retrieve a list of all nodes, extract specific status attributes, and handle potential errors. Furthermore, this document emphasizes the significance of continuous monitoring and presents various approaches for efficient polling, along with best practices for error handling and resilience.

Finally, examples of output formatting and integration with other systems like Grafana dashboards are included.

Introduction to Kubernetes Node Status Retrieval

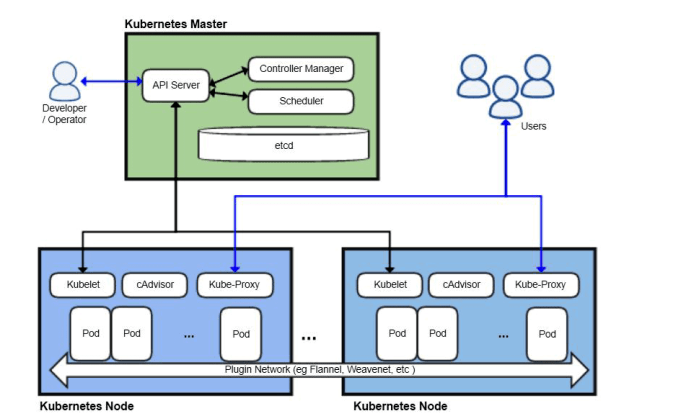

Kubernetes, a powerful container orchestration platform, relies on the health and status of its nodes to function effectively. Understanding the status of each node is crucial for resource allocation, application deployment, and overall cluster stability. Node status covers the node's readiness, operational capacity, and resource availability, and examining it enables proactive maintenance and faster problem resolution, which in turn improves the reliability of the entire system.

The information reveals not just the node’s current operational state, but also its capacity to execute tasks and host applications. This intricate information is crucial for numerous tasks, from ensuring application availability to dynamically scaling resources. By examining node status, administrators and applications can gain insights into the node’s ability to perform its assigned roles.

Node Status Information Types

Node status information encompasses a variety of details that are vital for understanding the node's current state and capacity. Readiness, liveness, and allocatable resources are all critical elements of node status, each offering unique insights. Readiness is reported by the `Ready` condition: `True` means the kubelet is healthy and the node can accept pods. Liveness is tracked through heartbeats: the kubelet periodically renews a `Lease` object and updates the node's status, and if these updates stop, the node controller changes the `Ready` condition to `Unknown`.

Allocatable resources represent the CPU, memory, ephemeral storage, and pod count available to pods after the node sets aside capacity for system daemons and the kubelet. The scheduler places pods against these values, so applications can use them to make informed decisions based on the node's current capabilities.

Typical Use Cases in Go Applications

Retrieving node status is essential for a variety of Go applications. These applications rely on node status to ensure efficient resource utilization and optimal application performance. Monitoring tools often use node status information to alert administrators to potential issues, while application deployment scripts can utilize node status to determine appropriate deployment targets. This information enables a streamlined and dynamic approach to managing Kubernetes clusters.

Furthermore, the information can be used to dynamically adjust resource allocation, ensuring applications have the necessary resources to operate optimally.

Key Kubernetes API Resources

Understanding the Kubernetes API resources related to nodes is essential for effectively retrieving and interpreting node status. These resources provide structured data about nodes and their associated properties.

| Type | Description |
|---|---|
| `Node` | Represents a physical or virtual machine in the cluster. It encapsulates the node's identity (name, labels, annotations), its spec, and its status. |
| `NodeStatus` | The `status` field of a `Node`. Contains the node's current state, including conditions such as `Ready`, capacity and allocatable resources, addresses, and system information (`NodeInfo`). |
| `NodeCondition` | One entry in `NodeStatus.Conditions`, describing one aspect of the node's health (`Ready`, `MemoryPressure`, `DiskPressure`, `PIDPressure`, `NetworkUnavailable`) with a status of `True`, `False`, or `Unknown`. |

Utilizing the Kubernetes API in Go

Accessing and manipulating Kubernetes resources is crucial for automating tasks and managing the cluster. This requires interacting with the Kubernetes API, a standardized interface for communication. The official Go client library for Kubernetes, `client-go`, provides a powerful framework for this interaction and is the cornerstone for working with the Kubernetes API in Go, enabling developers to write applications that integrate seamlessly with the cluster.

It abstracts the complexities of the API, providing a cleaner, more manageable interface for developers. This abstraction allows for focused development on application logic without getting bogged down in the intricacies of the underlying API requests. This methodology promotes code maintainability and facilitates efficient integration into various deployment pipelines.

Creating a Kubernetes Clientset

The first step in interacting with the Kubernetes API is constructing a `clientset`. The clientset acts as the intermediary between your application and the API server, handling authentication and communication. It is built from a `rest.Config`, which holds the API server address, the credentials for the chosen authentication method, and client settings such as timeouts; the clientset then exposes a typed client for each API group and version, such as `CoreV1()`. This configuration ensures that your application connects to the correct cluster and interacts with the API using the expected protocol.

Proper configuration is critical for successful API interaction.

Authenticating with the Cluster

Authentication is paramount for secure access to the Kubernetes cluster. Various authentication methods are available, including service accounts, bearer tokens, and client certificates. The choice depends on the security requirements of the application and the cluster configuration. The `client-go` library supports these methods. For example, when running inside a pod, `rest.InClusterConfig` picks up the pod's service account token automatically, and the application can then do whatever RBAC permits that account to do. Because nodes are cluster-scoped, reading them requires a ClusterRole that grants `get`, `list`, and `watch` on `nodes`.

Code Snippet for Constructing a Clientset

```go
import (
	"fmt"

	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/rest"
	"k8s.io/client-go/tools/clientcmd"
)

// createClientset prefers in-cluster configuration and falls back to a
// kubeconfig file when the program runs outside the cluster.
func createClientset(kubeconfigPath string) (*kubernetes.Clientset, error) {
	// InClusterConfig reads the pod's service account token and the
	// KUBERNETES_SERVICE_HOST/KUBERNETES_SERVICE_PORT environment variables.
	config, err := rest.InClusterConfig()
	if err != nil {
		// Not running in a pod: load credentials from a kubeconfig file,
		// e.g. "/path/to/kubeconfig" (replace with your kubeconfig path).
		config, err = clientcmd.BuildConfigFromFlags("", kubeconfigPath)
		if err != nil {
			return nil, fmt.Errorf("building client config: %w", err)
		}
	}
	return kubernetes.NewForConfig(config)
}
```

This code snippet demonstrates the process of constructing a `clientset`. The `InClusterConfig` function attempts to retrieve the configuration from within the cluster.

If that fails, it defaults to using a `kubeconfig` file.

Getting a List of All Nodes

Retrieving a list of all nodes in the cluster uses the core/v1 API group, exposed through the clientset's `CoreV1()` method, which provides access to essential cluster objects, including nodes.

```go
import (
	"context"

	corev1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/kubernetes"
)

// getNodes returns every node registered with the API server.
func getNodes(clientset kubernetes.Interface) ([]corev1.Node, error) {
	nodes, err := clientset.CoreV1().Nodes().List(context.TODO(), metav1.ListOptions{})
	if err != nil {
		return nil, err
	}
	return nodes.Items, nil
}
```

This function demonstrates how to fetch all nodes. The function leverages the `List` method of the `Nodes` resource, handling potential errors during the request.

Retrieving Node Name and Status

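Once you have a list of nodes, you can iterate through them to extract each node's name and status. This information is critical for monitoring cluster health and resource availability.

```go
// printNodeDetails prints each node's name and the status of its Ready condition.
func printNodeDetails(nodes []corev1.Node) {
	for _, node := range nodes {
		ready := corev1.ConditionUnknown // used if no Ready condition is reported
		for _, cond := range node.Status.Conditions {
			if cond.Type == corev1.NodeReady {
				ready = cond.Status // "True", "False" or "Unknown"
			}
		}
		fmt.Printf("Node Name: %s, Ready: %s\n", node.Name, ready)
	}
}
```

This function displays each node's name together with the status of its `Ready` condition. It looks the condition up by type instead of reading `Conditions[0]`, because the first entry is not necessarily the `Ready` condition and indexing an empty slice would panic.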

Extracting Specific Node Status Information

Delving deeper into Kubernetes node status reveals critical operational details, enabling proactive maintenance and optimized resource allocation. Understanding metrics like CPU utilization, memory pressure, and disk space is paramount for identifying potential bottlenecks and ensuring optimal system performance. This section details methods for extracting this vital information, outlining a structured approach to parsing the data and handling potential errors.

Retrieving Specific Node Attributes

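Kubernetes provides comprehensive node status information. Crucially, this data extends beyond the basic `Ready` condition: the `Node` object also reports capacity, allocatable resources, and pressure conditions (`MemoryPressure`, `DiskPressure`, `PIDPressure`) that signal resource exhaustion. Actual CPU and memory usage, however, is not part of the `Node` object; it comes from the Metrics API (`metrics.k8s.io`), typically backed by metrics-server. Combining both sources is essential for understanding node health and capacity.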

Parsing Node Status Data

A structured approach to parsing the returned node status data is vital for efficient data extraction and analysis. This involves careful consideration of the API response format and defining clear data structures to represent the extracted information. The `client-go` library decodes the API server's response into typed Go structs such as `corev1.Node`, so fields like `node.Status.Conditions` can be read directly without hand-written JSON parsing.

This allows developers to access and manipulate specific fields from the API response efficiently, ensuring the data is properly processed.

Error Handling during API Calls

Robust error handling is crucial for any application interacting with external systems, particularly when dealing with the Kubernetes API. Potential errors can arise from network issues, API server problems, or issues with the node itself. Implementing error handling mechanisms prevents application crashes and allows for graceful recovery from unexpected situations.

Extracting Node Status Information Function

The function below demonstrates a structured method for retrieving a specific node's status. The returned `Node` carries its conditions, capacity, and allocatable resources; live CPU, memory, and disk usage must be fetched separately.

```go
package main

import (
	"context"
	"fmt"

	corev1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/kubernetes"
)

// getNodeStatus fetches a single node by name; node.Status holds its
// conditions, capacity, allocatable resources, and addresses.
func getNodeStatus(clientset kubernetes.Interface, nodeName string) (*corev1.Node, error) {
	node, err := clientset.CoreV1().Nodes().Get(context.TODO(), nodeName, metav1.GetOptions{})
	if err != nil {
		return nil, fmt.Errorf("failed to get node %q: %w", nodeName, err)
	}
	return node, nil
}
```

This function retrieves a node by name using the `clientset`. Errors are wrapped with `%w` and the node name, so callers keep access to the underlying API error while gaining context.

Filtering Nodes Based on Criteria

Filtering nodes based on specific criteria, such as labels, is a common requirement for managing and targeting particular nodes. Labels provide a powerful mechanism for grouping and targeting nodes, allowing for selective actions based on specific properties. The following example demonstrates filtering nodes by a label such as `tier`.

```go
// Uses the same imports as getNodeStatus: context, fmt, corev1, metav1, kubernetes.

// filterNodesByLabel returns the nodes whose label labelKey equals labelValue.
// The API server applies the label selector, so only matching nodes are returned.
func filterNodesByLabel(clientset kubernetes.Interface, labelKey, labelValue string) ([]corev1.Node, error) {
	selector := labelKey + "=" + labelValue // e.g. "tier=frontend"
	nodes, err := clientset.CoreV1().Nodes().List(context.TODO(), metav1.ListOptions{LabelSelector: selector})
	if err != nil {
		return nil, fmt.Errorf("failed to list nodes: %w", err)
	}
	return nodes.Items, nil
}
```

This function filters nodes based on a specific label and returns a list of matching nodes. Error handling is included to address potential issues.

Handling Errors and Resilience

Kubernetes, a complex orchestration system, often faces transient network issues, API timeouts, or resource exhaustion. Robust error handling and resilience are crucial to ensure reliable node status retrieval. A system designed for fault tolerance minimizes disruptions and maintains consistent operation, in line with the principles of high availability. Because many components interact in a Kubernetes cluster, partial failures are to be expected.

Successfully retrieving node status demands a proactive approach to error management, guaranteeing a continuous flow of data despite transient glitches. This requires a careful examination of possible errors and the application of appropriate strategies to mitigate their impact.

Common Errors in Node Status Retrieval

Retrieving node status in Kubernetes involves interacting with the Kubernetes API. Potential errors include network connectivity problems, API server overload or rate limiting, authentication and authorization failures, and requests for nodes that no longer exist. Understanding the nature of these errors is essential for crafting appropriate responses. For instance, network timeouts often indicate temporary connectivity issues, while API server errors may signify temporary overload or configuration problems. Note that an unreachable node does not by itself make the API call fail: the API server still returns the `Node` object, whose `Ready` condition then has status `Unknown`.

Error Handling Best Practices

Implementing robust error handling is crucial for maintaining application stability. The strategy should encompass comprehensive checks for network connectivity, API responses, and node availability. This approach ensures graceful degradation in case of failures.

Retry Mechanisms and Exponential Backoff

Retry mechanisms are essential for handling transient failures. An exponential backoff strategy, where the retry interval increases exponentially after each failed attempt, is commonly used. This strategy prioritizes stability over immediate retrieval, preventing overwhelming the API server with repeated requests during periods of instability.

Code Example: Implementing Retry Logic

```go
package main

import (
	"context"
	"fmt"
	"time"

	corev1 "k8s.io/api/core/v1"
	apierrors "k8s.io/apimachinery/pkg/api/errors"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/kubernetes"
)

// getNodeStatus fetches a node, retrying ServiceUnavailable errors with
// exponential backoff (1s, then 2s, ...).
func getNodeStatus(clientset kubernetes.Interface, nodeName string) (*corev1.Node, error) {
	const maxRetries = 3
	retryInterval := 1 * time.Second

	var lastErr error
	for i := 0; i < maxRetries; i++ {
		node, err := clientset.CoreV1().Nodes().Get(context.TODO(), nodeName, metav1.GetOptions{})
		if err == nil {
			return node, nil
		}
		lastErr = err
		switch {
		case apierrors.IsNotFound(err):
			// The node does not exist; retrying will not help.
			return nil, fmt.Errorf("node not found: %s", nodeName)
		case apierrors.IsServiceUnavailable(err):
			// Transient: wait, then double the interval for the next attempt.
			fmt.Printf("Service unavailable, retrying in %v...\n", retryInterval)
			time.Sleep(retryInterval)
			retryInterval *= 2
		default:
			return nil, fmt.Errorf("failed to get node status: %w", err)
		}
	}
	return nil, fmt.Errorf("failed to retrieve node status after %d retries: %w", maxRetries, lastErr)
}
```

This example demonstrates a function `getNodeStatus` that retrieves node status using the Kubernetes API. The `for` loop implements the retry mechanism: it retries `ServiceUnavailable` errors and doubles the retry interval after each failed attempt (exponential backoff). Critically, it distinguishes between errors like `ServiceUnavailable`, which warrant a retry, and errors like `NotFound` or others that indicate a more fundamental issue.

Handling Network Issues and Transient Failures

Network interruptions or transient failures are common occurrences in distributed systems. Strategies to handle such disruptions include timeouts for API calls, implementing circuit breakers to prevent cascading failures, and monitoring network connectivity. The code example includes checks for `ServiceUnavailable` errors, allowing the program to adapt to transient problems.

Efficient Node Status Monitoring

Kubernetes node health is crucial for cluster stability and application availability. Continuous monitoring ensures rapid detection of issues, enabling proactive maintenance and minimizing downtime. This proactive approach is analogous to the early warning systems used in seismology, which constantly monitor seismic activity to detect earthquakes early and give advance warning. Efficient monitoring requires a structured approach that uses concurrency to avoid delays and respond promptly to changing node conditions.

The methodology described leverages principles of asynchronous operation to ensure responsive feedback loops, mirroring the real-time data acquisition systems used in modern astronomical observatories.

Continuous Monitoring Structure

Continuous monitoring of Kubernetes nodes involves a structured approach to periodically check node status and react to changes. This structure must include a mechanism for handling potential errors and ensuring resilience in the face of transient failures. The design is analogous to a complex biological system where various organs and subsystems continuously monitor and adjust to maintain homeostasis.

Importance of Goroutines and Channels

Goroutines and channels are indispensable for asynchronous operations in Go. Goroutines enable concurrent execution, allowing multiple status checks to run at the same time without blocking the main goroutine. This is crucial for responsiveness and scalability, as it prevents the system from becoming bogged down during checks. This parallel processing is similar to the way a human brain processes multiple sensory inputs simultaneously, allowing for quick reactions and decision-making.

Channels facilitate communication between goroutines, enabling data exchange and status updates, mirroring the way neurons in the nervous system transmit signals for coordinated action.

Periodic Node Status Check Function

A dedicated function is essential for periodic node status checks. This function should leverage goroutines and channels to handle the asynchronous nature of the checks, minimizing delays and maximizing responsiveness. This function should follow a structured pattern, checking node status at defined intervals and reporting any observed issues. This periodic checking process mirrors the automated monitoring systems used in large-scale manufacturing, where sensors continuously monitor equipment for potential problems.

Comparison of Polling Approaches

| Approach | Advantages | Disadvantages |
|---|---|---|
| Periodic checks | Simple to understand and implement. | Can be inefficient if the node status rarely changes, adding unnecessary overhead; changes are only noticed at the next check. |
| Event-driven | Real-time updates, reacting immediately to node status changes. Minimizes the overhead associated with unnecessary checks. | More complex implementation, requiring careful handling of events and potential race conditions. Setting up and managing event listeners can be complex. |

This table demonstrates a comparison of the common approaches to monitoring node status. Periodic checks offer simplicity, but they can be wasteful if node status remains unchanged. Event-driven approaches, while more intricate, provide immediate updates and reduce overhead in stable conditions. This comparison is analogous to choosing between a manual inspection of a machine and an automated sensor system.

Output Formatting and Presentation

The retrieved Kubernetes node status data, brimming with intricate details, needs meticulous presentation for effective interpretation and actionable insights. Proper formatting is crucial for human comprehension and automated analysis. Visualizing this data in a user-friendly manner enables swift identification of problematic nodes, aiding in proactive maintenance and optimized resource utilization. The variety of data necessitates a tailored approach to presentation, considering the specific needs of the intended audience and the context of the analysis.

Different Output Formats

Various formats exist for presenting node status data, each with its own strengths and weaknesses. Choosing the right format hinges on the intended use case and the desired level of detail. JSON, a widely used format for structured data exchange, offers a compact and machine-readable representation. Structured text, with its human-readable nature, proves beneficial for quick visual scanning and analysis.

Formatted tables excel at presenting tabular data, enabling users to quickly compare node attributes.
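For the JSON case, a sketch using only the standard library's `encoding/json`. The `nodeStatusJSON` type and its field names are hypothetical; in practice they would be filled from a `corev1.Node`.

```go
package main

import "encoding/json"

// nodeStatusJSON is an illustrative output shape, not a Kubernetes API type.
type nodeStatusJSON struct {
	Name              string   `json:"name"`
	Ready             string   `json:"ready"`
	AllocatableCPU    string   `json:"allocatableCPU"`
	AllocatableMemory string   `json:"allocatableMemory"`
	Problems          []string `json:"problems,omitempty"` // omitted when there are none
}

// encodeNodeStatuses renders the statuses as a compact JSON array.
func encodeNodeStatuses(statuses []nodeStatusJSON) (string, error) {
	data, err := json.Marshal(statuses)
	if err != nil {
		return "", err
	}
	return string(data), nil
}
```

Swapping `json.Marshal` for `json.MarshalIndent` produces the same data in a more readable, indented form.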

Console Output Formatting

For interactive console output, a well-organized table format is highly effective. A table displaying columns for node name, status, CPU utilization, memory usage, and disk space can provide a comprehensive overview of the node’s health. Color-coding can be employed to highlight critical conditions, such as nodes with high CPU utilization or low disk space, enhancing the visual impact and facilitating immediate identification of potential issues.

Examples of color-coding include red for critical issues, yellow for warning conditions, and green for healthy states.

Dashboard Integration

Dashboard integration demands a format that harmonizes with the existing dashboard framework. JSON or a formatted text format, easily parsed by dashboarding tools, is suitable for this purpose. Visualization tools within the dashboard can be leveraged to display trends in node metrics, such as CPU usage over time, facilitating proactive monitoring and issue resolution. Dynamic graphs showing CPU usage, memory utilization, and network traffic can offer a real-time view of node performance, allowing for immediate identification of potential bottlenecks.

This enables efficient resource management and timely intervention.

User-Friendly Table Presentation

A well-designed table presents the node status data in a structured format. Headers for node name, status, CPU usage (%), memory usage (%), and free disk space (%) provide clear labeling of the data; further columns, such as network bandwidth (Mbps), can be added as needed. Color-coding, as previously mentioned, enhances visual clarity, with distinct colors signifying critical, warning, and healthy states. This structured format facilitates easy comparison across multiple nodes, quickly highlighting potential issues.

An example is shown below:

| Node Name | Status | CPU Usage (%) | Memory Usage (%) | Free Disk Space (%) |
|---|---|---|---|---|
| node1 | Healthy | 25 | 40 | 80 |
| node2 | Warning | 85 | 90 | 20 |
| node3 | Critical | 100 | 95 | 5 |

Example Use Cases

Kubernetes node status monitoring is crucial for maintaining a healthy, well-performing cluster. Understanding the status of individual nodes provides insights into resource utilization, potential failures, and overall cluster health. This allows for proactive management and efficient troubleshooting, minimizing downtime and maximizing application availability. The ability to dynamically adapt to changing conditions, such as node failures or upgrades, is a key aspect of a robust Kubernetes deployment. The applications of node status retrieval extend far beyond basic monitoring.

From automated scaling to predictive maintenance, the insights gleaned from node status data give administrators a powerful toolset for optimizing cluster performance, enabling more sophisticated automation and proactive problem resolution that keep services available.

Monitoring Node Health and Performance

Node status information is essential for monitoring the health and performance of Kubernetes nodes. This involves tracking CPU usage, memory consumption, disk space, network connectivity, and other key metrics. Real-time monitoring enables administrators to identify performance bottlenecks and potential issues early on, before they escalate into major problems.

- CPU Utilization: High CPU utilization on a node can indicate resource contention or a misconfigured application. This data is vital for capacity planning and identifying resource-intensive tasks. Monitoring this metric enables timely intervention and prevents performance degradation.

- Memory Usage: Elevated memory consumption might signal memory leaks or application issues. This metric is crucial for identifying potential OOM (Out-of-Memory) conditions and ensuring sufficient memory for application processes.

- Disk Space: Low disk space on a node triggers the `DiskPressure` condition, after which the kubelet starts evicting pods. Regular monitoring of disk space utilization allows for proactive adjustments to storage capacity or the removal of unnecessary data.

- Network Connectivity: Problems with network connectivity can hinder communication between pods and services. Monitoring network connectivity helps identify and resolve network issues affecting node functionality.

Alerting on Critical Conditions

Monitoring node status data allows for the implementation of alerts for critical conditions. Alerts can be configured to trigger based on specific thresholds, such as high CPU utilization, low memory, or network outages. This enables administrators to react quickly to problems, preventing service disruptions and maximizing application uptime.

- Automated Response Systems: Alerting systems can trigger automated responses, such as scaling up resources or restarting problematic services. This automated response mechanism allows for faster resolution and prevents escalation of minor issues.

- Human Intervention: Alerts can notify administrators of potential problems, prompting timely human intervention to resolve issues promptly. This provides a crucial layer of protection against service interruptions.

Centralized Logging and Reporting

A function for reporting node status to a central logging system is critical for efficient troubleshooting and analysis. This central repository consolidates logs from all nodes, allowing for comprehensive visibility into the cluster’s health.

- Data Aggregation: A centralized logging system aggregates node status data from various sources, enabling a holistic view of the cluster’s overall health.

- Trend Analysis: Historical data from the central log allows for trend analysis, identifying patterns and anomalies in node behavior over time.

Integration with Other Systems

Integration with other systems, such as Grafana dashboards, provides a comprehensive visualization of Kubernetes node status. Grafana dashboards allow for the display of key metrics in graphical format, enabling administrators to quickly assess the overall health of the cluster.

- Visualization: Grafana dashboards offer real-time visualizations of node status metrics, enabling administrators to quickly identify and address potential problems.

- Data Correlation: Integrating with other systems allows for correlation of node status data with other metrics, providing a more comprehensive understanding of cluster behavior.

Example Application: Node Status Monitoring

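A sample application can monitor node status and trigger alerts based on predefined thresholds. The application polls nodes, gathers status information, and evaluates metrics against predefined thresholds.

```go
// Imports: corev1 "k8s.io/api/core/v1", "k8s.io/apimachinery/pkg/api/resource".

// monitorNodeStatus calls sendAlert when the node's allocatable CPU is below
// threshold; sendAlert stands in for your alerting integration.
func monitorNodeStatus(node *corev1.Node, threshold resource.Quantity, sendAlert func(string)) {
	// Allocatable is a ResourceList; Cpu() returns a zero quantity if CPU is unset.
	if node.Status.Allocatable.Cpu().Cmp(threshold) < 0 {
		sendAlert("Low CPU Allocatable on node: " + node.Name)
	}
}
```

This demonstrates a simplified example; a production-ready application would incorporate more robust error handling and alerting mechanisms.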

Closing Summary

In conclusion, this guide provides a practical and detailed approach to obtaining Kubernetes node status information using Go. By leveraging the Kubernetes API and `client-go`, developers can build robust applications for monitoring, alerting, and automating tasks related to cluster health. The provided examples, error handling strategies, and monitoring techniques empower developers to build efficient and reliable systems for managing Kubernetes clusters.

FAQ

Q: What are the common errors when retrieving node status?

A: Common errors include network issues, authentication problems, API rate limiting, and transient cluster failures. Robust error handling, including retries with exponential backoff, is crucial for reliable operation.

Q: How can I monitor node status continuously?

A: Continuous monitoring is achieved using goroutines and channels for asynchronous operations. Periodic checks, combined with event-driven approaches, provide a comprehensive solution. The selection of the appropriate approach depends on the desired level of responsiveness.

Q: What are the different formats for presenting node status data?

A: Node status data can be presented in various formats, including JSON, structured text, and formatted tables. The chosen format should be user-friendly and tailored to the specific use case, considering factors like console output, dashboards, or integration with other systems.

Q: How do I filter nodes based on specific criteria?

A: Nodes can be filtered based on labels using the Kubernetes API. The `client-go` library provides mechanisms to query nodes based on specific labels, enabling targeted monitoring and management.